Segmentation Faults

For some time Harvest had been struggling with unsolved segmentation faults. I’d like to tell everyone the story of this journey. But first…

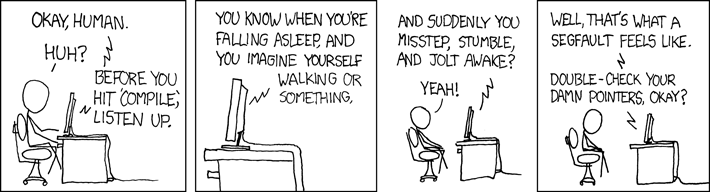

What is a segmentation fault?

Segmentation faults, or segfaults as we like to call them, are the errors that happen when a process tries to access memory it cannot physically address. Wait, what? Addressing memory? I thought I was working with Ruby and didn’t have to take care of that!?

It turns out the Ruby interpreter itself has bugs (surprise!). Gems that have C extensions must worry about segfaults as well.

What was happening?

A segfault is different from other kinds of errors, because they’re so terrible that they kill the process. I mean, if things get so messy in the inside that you’re accessing memory you shouldn’t, the best you can do is stop doing what you’re doing so you don’t make it worse.

When the process dies it is usually not sent to whatever exception tracking software you use. In our case, it’s Passenger that notices the segfaults and dumps the error that Ruby returns into the passenger logs.

We were aware of the segfaults, but not being on our exception tracker made them very easy to ignore. That is until we started using New Relic and lots of exceptions started happening.

Exceptions?

But you said segfaults didn’t cause exceptions, they just killed the process? You’re right my friend, but let me get to the point.

What we saw on our exception tracker was a lot of exceptions happening within a three-minute period, all crashing on the same line of code in the newrelic_rpm gem. The first suspicion, of course, was a bug in that gem. We opened a support ticket with New Relic immediately and they started investigating. On our side, we discovered that the errors happened on the same server and, with further debugging, on the same process.

The way New Relic works is it first spawns a parallel thread together with the Rails process. This process sends instrumentation data to New Relic. Since New Relic is a separate process, it cannot affect the performance of the primary Rails process.

What we saw on the New Relic logs is that an agent would spawn, only to cause an exception a few minutes later and keep causing exceptions until yet another few minutes later it would stop. We have configured passenger to have every Rails process respond only to a limited amount of requests, which explained this limited lifetime pattern we saw.

The exceptions looked different on almost all these incidents, but they would repeat until the agent stopped. Here are some examples:

ERROR : Caught exception in trace_execution_scoped header.

ERROR : NoMethodError: undefined method `<' for nil:NilClass

ERROR : Caught exception in trace_execution_scoped header.

ERROR : NoMethodError: undefined method `count_segments' for false:FalseClass

ERROR : Caught exception in trace_method_execution footer.

ERROR : NoMethodError: undefined method `tag' for #<Array:0x007f70a3c7bbe8>

On their side, Ben from New Relic pointed to a very funny exception that did get tracked: NotImplementedError: method 'method_missing' called on unexpected T_NONE object (0x007ffa15822ca8 flags=0x400 klass=0x0). According to his analysis, this is a theoretically impossible combination of flags in Ruby Land.

Stuff that shouldn’t be false

That exception got me thinking, wait, something is corrupted without causing a segfault?

I’ve been checking our exceptions daily for a few months, and let me tell you, the amount of activity in there was suspicious. Here are some example exceptions:

TypeError: false is not a symbolNoMethodError: undefined method 'keys' for nil:NilClass(This error happened on controller#action calledfalse#false)TypeError: can't convert false into StringNoMethodError: undefined method 'zero?' for false:FalseClass

I think every Ruby developer is used to the NoMethodError’s on nil. I mean, it’s the Ruby version of an Uncaught Exception in Java. You see it almost every day in your work life. But NoMethodError’s on false? It’s really not that easy to get a false by mistake.

Corruption

At the end, the data pointed to things getting corrupted. Random variables seemed to become values that weren’t right. All of it sprinkled with a healthy amount of segfaults.

My gut feeling was that it all had to be the same, but what can be done about it?

The (only) good thing of segfaults is that they leave a trace. So instead of focusing on seemingly random errors, we could at least try to get some useful information from the thousands of segfault logs.

How do you debug a segfault?

Short answer: it’s very difficult and almost impossible. As with any bug, the first thing you should try to do is reproduce the error. With these segfaults we never got to it, since they where so random. Besides, of all the information provided by a segfault, Ruby devs only understand the normal stack trace and that’s about it.

So unless you’re a C expert, you’re pretty much screwed. However, we can use the common wisdom of the masses. If you ever need to fix a segmentation fault, don’t waste your time debugging. These are almost always the solutions:

- It’s probably a bug in one of the gems that uses C extensions. Hope that somebody else had the problem first and they solved it. Therefore, update or remove all your gems with C extensions.

- If not, then it’s probably a bug in the Ruby interpreter. Update Ruby to the latest patchlevel.

- If you’re already at the latest patchlevel, then try the next version.

Updating gems

Thanks to the part of the segfaults that list the files and C extensions that were loaded, we compiled a list of possibly offending gems. Then we updated or removed all of these gems, and the segfaults stopped. Our update process pointed to a very outdated version of oj we were using. Oj is a fantastic gem that fixes bugs diligently, but we had not kept it up to date.

And now our exception tracker has been free of seemingly random exceptions!

Lessons learned

We learned many things, among them:

- Don’t try to understand a segfault. It’s almost never worth it.

- It’s really important to keep gems up to date.

- Things that don’t blow up loudly are very easy to ignore.

I’d like to thank New Relic for their support. Their contribution helped a lot, even when in the end it turned out to be a problem on our system.

In retrospect the solution seems so obvious that it hurts. We’re very sorry to anyone who got a totally random error using Harvest. We take errors very seriously and we’re very glad that we can again focus on the errors in our application code.