I hope you slept well during July. Unfortunately, the Ops team here at Harvest did not. Harvest had a series of very short outages during July, all occuring at approximately the same time (12:30 a.m. ET). We still achieved 99.95% uptime for the month, but our availablity report for July shows all of these events (too many for our liking). I want to apologize to customers who were affected by these events.

The summary is that an overloaded, and incorrectly designed logging system was impacting Harvest application requests when a nightly archive job ran. For others who have implemented rsyslog and the RELP protocol, I urge you to be careful about the potential for blocking applications which use the syslog service. Read on for the details.

The problem begins

At 12:31 a.m on July 2nd, we received our first notification that some requests were timing out. By the time I looked at the situation on the servers, we were many thousands of log entries past the event and things were back to normal. Odd. I assumed at the time that a brief network issue had degraded performance between two components of our stack, and that the issue was between the app servers and the database servers. This was pure conjecture, since no logs showed any evidence supporting this theory, or any other theory I was able to formulate.

A similar incident occurred on July 9th, then again on July 16th, both around the same time. Each time the event occurred, we would see a large number of Rails requests become very slow, and our available pool of Passenger processes for inbound requests would be depleted, causing some customer requests to receive errors.

On the night of July 16th, 2013, I bade farewell to sleep and proposed to caffeine as I turned my Harvest cap backwards.

Searching for evidence

For a few nights, I was logging in to our systems to watch for this event in action, looking for its genesis. This watched pot did not boil, and the issue never occurred during the 3 nights I was watching for it.

Developers and ops folks reading this post are probably rolling their eyes. Logs and performance metrics exist to find these patterns. It should be simple to find the issue, right?

Right. But also wrong.

At this point, we knew:

- The event lasts for a few minutes (3–7 minutes).

- The timeframe of the event was predictable to within 10 minutes.

- Most dynamic requests would become slow, then suddenly recover.

- The load balancer logs showed us how slow the requests were (some greater than 15 seconds, which is an aeon).

- The Rails logs, on the other hand, indicated that requests were fast once processed.

- This many slow requests was exhausting our application server resources and we couldn’t queue further requests for processing. Customers saw errors at this point.

On July 23rd, I was able to watch the issue live for the first time. As request times increased, I was profiling the performance of network traffic between components and watching every metric I could for the smoking gun. I had a bunch of systems utilities logging their data so I could review the open network connections for Harvest’s backend services. I was extracting performance numbers from streaming application logs and was hoping for something to be glaring at me in the details. Nothing. The issue only lasted for 3 minutes while I was watching it. We then recovered in the space of a second, and that was it.

Without a smoking gun, we had to invent theories. The first theory was that the issue was caused by an external service that becomes slow for a short interval.

The first external service we (incorrectly) looked at was Skylight, which Harvest uses to log performance data. We spoke with Carl, explained our issue, and asked for his thoughts. A patient and wise man, Carl analyzed all the Harvest data on their end and concluded that although Harvest was indeed slow during these intervals, the way that Skylight is implemented would not cause this issue. We decided to remove Skylight temporarily to be sure, and on July 25th, without Skylight being deployed, we saw the issue recur. Skylight was not the culprit. Thanks to Carl and the Skylight crew for entertaining our theories.

At this point, I asked our excellent data center providers, Server Central, to perform a network traffic analysis to determine if there was a pattern of network traffic anywhere at all during the outage windows, or if some network path was being saturated and degrading performance. The team at Server Central really stepped up and did an exhaustive check of all of our network traffic and determined that nothing untoward was happening during the time we were seeing the issue. (If you are looking for a place to host your servers, you should talk to these guys.)

So now we have Rails logs that say things are fast, load balancer logs that say things are slow, an external performance analysis service which agrees with the load balancers, and a predictable window for the issue.

We split our global pool of application servers into a few different function-based pools. The theory was that if the problem was exhibited in only one of the pools, that we would have narrowed down the possible scope of the issue somewhat. However, the last problem window had occured among all of the pools, so it wasn’t isolated to one area of Harvest.

Narrow down the scope of the investigation

We had to decide at this point if we believed the issue was caused by inbound requests which were doing something unexpected, or if the issue was caused by something on our end which was performing poorly and then recovering. We started our investigation on the former.

Harvest handles a lot of traffic. We also have a lot of hardware focused on serving this traffic. This means two things: first, there is a lot of data to analyze if you need to analyze our traffic data in a new way; second, it means that if this issue was being caused by external requests that there would have to be a very large number of them to have this kind of effect.

We spent a couple of hours looking at our traffic logs, filtering them in various ways, trying to find a large number of requests isolated in the problem timeframe and looking like they could have wide ranging performance effects. We didn’t find anything.

In order to concentrate our energy, we decided to abandon the idea that the problem was caused by inbound traffic and focus our efforts on searching for an anomaly in our systems.

Working through possible theories

I set out to collect and systematically debunk any theories we could come up with, no matter how absurd they sounded. I drew up a list of 10 theories to explore and debunk.

Was memcache becoming slow for some reason? No. Was MySQL becoming slow for some reason? No. Was nginx encountering a bug somewhere and logging the evidence? No. And so on, through five or six different theories. Next on the list: was the problem caused by a Passenger bug?

If you use Passenger, you are no doubt familiar with using a SIGABRT signal to force Passenger to log a backtrace to the Rails log. Testing this procedure, I was unable to locate the backtrace data in our Rails logs. I asked Hongli Lai over at Phusion to help me find this data. We even arranged for Hongli to be available as a consultant to help us troubleshoot the problem if required. Hongli was very gracious and I would like to thank him for his willingness to help.

Trying to force the Passenger backtrace into the Rails log ultimately led me to the cause of the problem, though it wasn’t a Passenger bug. We use rsyslog to send our Rails logs from each application server to central log servers where various tools parse and analyze the application logs. The Passenger backtraces were not appearing in the Rails logs, and I was unsuccessfully searching every possible log location for them.

At the same time, we were doing yet another fine grained audit of every single scheduled task which gets run across the entire Harvest architecture, looking for a scheduled task near the outage window which could possibly cause the problem.

Finally, the smoking gun

I noticed a harmless looking cron job responsible for archiving old log files on one of our rsyslog servers. It ran every day a few minutes before the window. Looking at the system metrics for this log server, I noticed increased levels of IOwait while this archiving ran through the outage window.

When we implemented rsyslog we had chosen to use the RELP protocol, because we wanted maximum log reliability. We had assumed at the time that the impact of any rsyslog performance or availability issues would be contained by the rsyslog subsystem, not spread to the applications which were using the syslog facility. This turns out to have been naïve.

We found some reports of other folks having similar experiences with rsyslog/RELP. Performance issues could easily lead to rsyslog local queue exhaustion, which could lead to applications blocking while waiting on the syslog facility.

At last, a credible theory.

I readied a move away from TCP/RELP towards UDP for rsyslog traffic in Chef and deployed it. There was a syntax bug in our config which immediately proved our theory to be correct. While rsyslog messages were being queued locally because of the syntax bug, Passenger processes began to pile up as Rails requests blocked on calls to syslog. This was the exact behavior we would see during the mysterious outage windows.

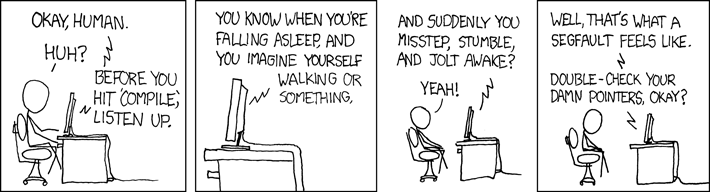

The problem that we were struggling to diagnose because our application logging didn’t confirm the existence of the problem turned out to be the application logging itself.

Fixing the design

We ended up moving back to using RELP in rsyslog, but instead of Rails using the syslog facility directly, Rails now writes logs to a local file on the filesystem and rsyslog uses this text file as an input. Rails logs are now shipped to the central logging servers in a way which is decoupled from Rails entirely. This way, any future rsyslog performance issues should be contained within rsyslog and not impact our application.

The mystery finally solved, caffeine and I took some time apart. (We have since gotten back together.)

I’m not proud of how long it took us to find the problem, and I am really not proud of the number of events on our uptime report during the time it took us to discover the cause. I am very proud of the team that helped troubleshoot this issue, though. Harvest has an amazing team who are good at working under pressure.

Like a great joke, or a visionary piece of technology, it seems obvious once you see it. Someone had to see it for the first time, though.

Thanks for being patient while we figured this one out.